The Loss of Control Playbook: Degrees, Dynamics, and Preparedness

Read the full paper here.

Loss of control (LoC) over advanced AI systems has rapidly become a focal point for legislators, regulators, and industry leaders. LoC appears in California Senate Bill 53; in the AI Risk Evaluation Act, introduced by Senators Josh Hawley and Richard Blumenthal; in the EU AI Act’s General-Purpose AI Code of Practice; and in the frontier safety policies and system cards of select frontier AI companies. Despite this institutional attention, decision‑ and policymakers are being asked to act without an actionable, shared understanding of what “loss of control” concretely denotes.

Our research report, ‘The Loss of Control Playbook: Degrees, Dynamics, and Preparedness,’ addresses this gap. The report aims to make LoC conceptually tractable and operationally useful, so that governments and organizations can begin preparing adequately, today, for the national security and societal threats posed by advanced AI systems.

The report does so in three steps:

- It proposes a novel taxonomy of LoC;

- It introduces a practical governance framework that focuses on mitigations that can be actioned today; and,

- It analyses the long‑term dynamics that could lead society into a precarious “state of vulnerability” to LoC and the resulting consequences for societal resilience and national security.

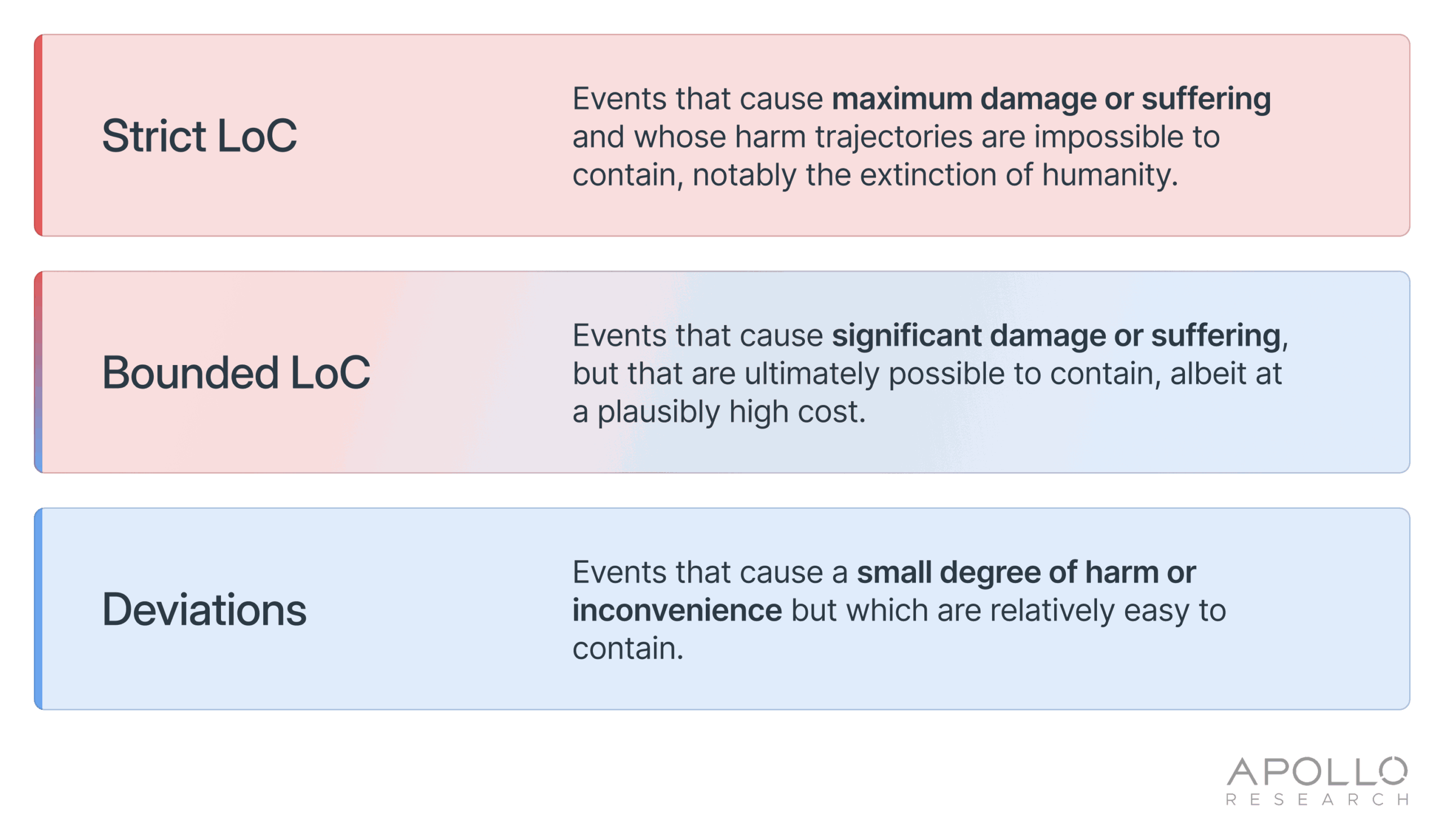

A novel taxonomy for Loss of Control (LoC): Deviation, Bounded LoC and Strict LoC

First, the report reviews existing definitions of LoC, both in the AI literature and in other safety-critical industries such as aviation, nuclear, and cybersecurity, in search of a common conceptualization. We found, however, that existing definitions are diverse. Some focus on the loss of reliable direction or oversight; others emphasize situations in which there is no clear path to regaining control. Some implicitly include failures that already occur in current systems, while others implicitly limit LoC to scenarios involving highly advanced or even superintelligent AI. Definitions in other safety‑critical sectors are equally heterogeneous and often map poorly onto the distinctive, agentic role that AI systems might play.

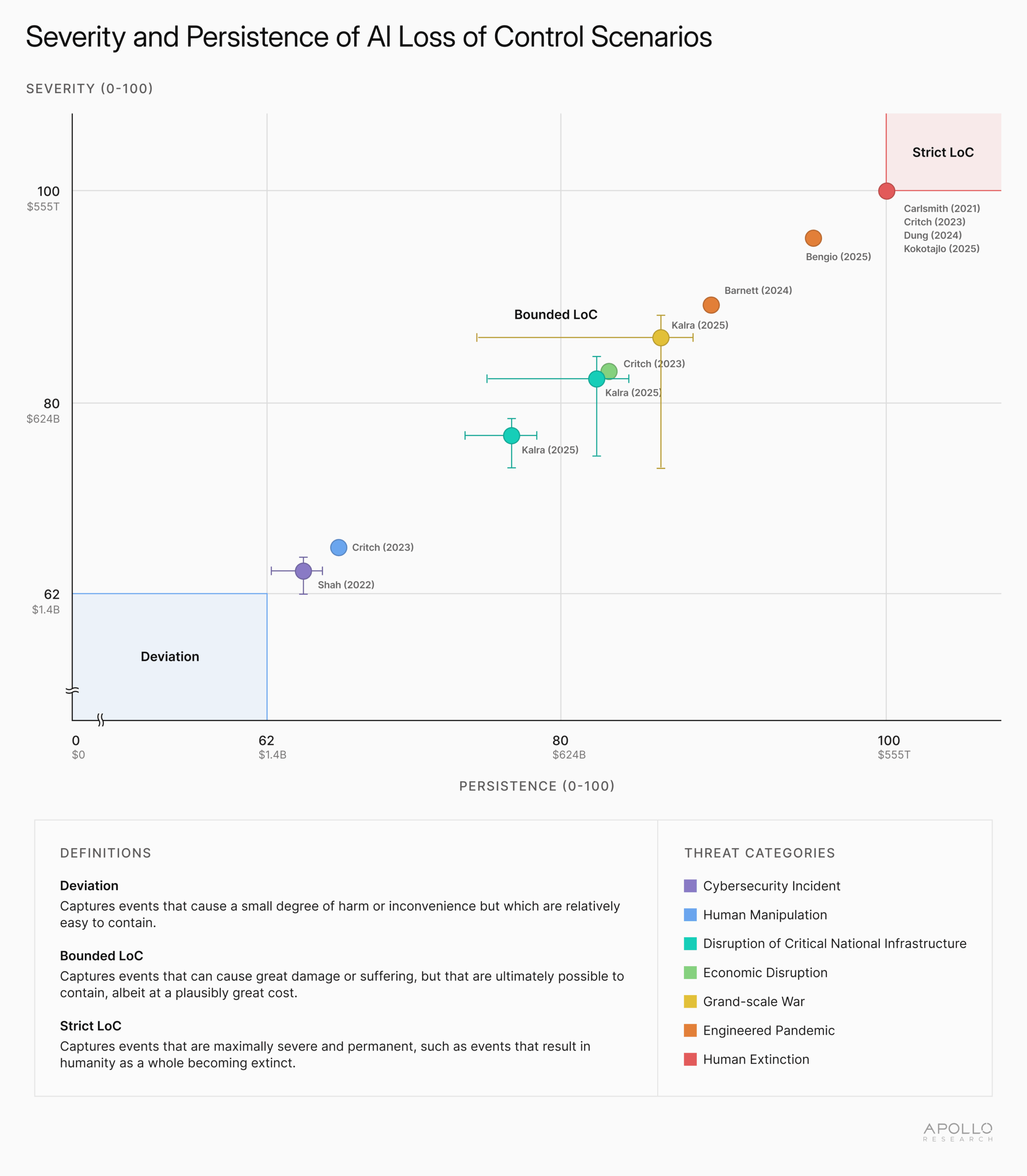

We therefore stepped back from definitions and examined scenarios instead. We reviewed 130 works from academia, think tanks, and government and, applying our methodology, extracted 12 concrete LoC scenarios. We then mapped these scenarios against two cross-cutting dimensions that emerged from our review: severity, understood as the number of people affected and the degree of harm; and persistence, understood as the difficulty of interrupting the “harm trajectory” once an LoC event has begun.

This exercise led us to conclude that LoC is not a single point but a spectrum, along which events cluster into three qualitatively distinct bands. On this basis, the report proposes a taxonomy with three degrees: Deviation, Bounded LoC, and Strict LoC.

- Deviation: captures events that cause some harm or inconvenience but lack the severity and persistence needed to reach the economic consequences threshold that the U.S. Department of Homeland Security, federal agencies, and the intelligence community use to demarcate national-level events in the Strategic National Risk Assessment.

- Bounded LoC: captures events that cause great damage or suffering and are difficult but not impossible to contain, albeit potentially at great cost. Bounded LoC covers threats or hazards that could significantly impact U.S. homeland security.

- Strict LoC: captures events that are maximally severe and permanent, i.e., events that result in the extinction of humanity as a whole.

The taxonomy is approximate rather than numerically sharp; its purpose is to give decision‑ and policymakers a vocabulary for distinguishing between policy‑relevant classes of LoC outcomes. It clarifies, for example, that leading policy references to LoC need not be read as covering every minor deviation, nor as limited to speculative extinction events.

Extrinsic levers to mitigate LoC today: the DAP framework

Next, the report considers where interventions are most workable today. A natural starting point would be AI models’ capabilities and propensities. However, we argue that a capabilities‑first approach is not yet mature enough to support systematic governance. Given this limitation, we propose a complementary strategy that largely sidesteps unresolved questions about capabilities: instead of focusing on aspects intrinsic to an AI system (its capabilities and propensities), it focuses on aspects extrinsic to it. The strategy rests on three components: deployment context, affordances, and permissions. We refer to this as our ‘DAP framework.’ For each component, we suggest the following steps:

- Deployment context: (1) reviewing the ‘composition’ of the deployment context (i.e., the environment and use case), and clarifying whether the deployment context should be considered ‘high-stakes’ (e.g., critical national infrastructure, military, AI research and development) or not; and (2) assessing the potential for cascading failures across interconnected AI and non-AI systems, including through threat modeling and red teaming.

- Affordances: (1) considering whether an affordance is necessary to achieve the intended task; (2) considering every action that an affordance could enable and the negative consequences thereof, and then using permissions to limit the affordance as far as feasible to reduce those risks; and (3) accounting for the potential for future, highly advanced AI systems to manipulate insufficiently informed human users into giving the AI system additional affordances.

- Permissions: (1) restricting permissions to the minimum an AI system needs to complete its task, in line with the well-established principle of least privilege; (2) weighing the benefits and risks of reduced human oversight against the benefits and risks that increased permissions could bring; and (3) accounting for the potential for future, highly advanced AI systems to manipulate insufficiently informed human users into giving the AI system additional permissions.

Taken together, the DAP framework provides decision-makers with a set of levers that can be pulled immediately, even in the face of significant uncertainty about AI capabilities and propensities. Considering the implications of LoC is particularly important for critical use cases in high-stakes environments, such as military or national security settings, where significant LoC threats could preclude the use of these advanced AI systems.

Living with a ‘State of Vulnerability’: a future with highly advanced AI systems

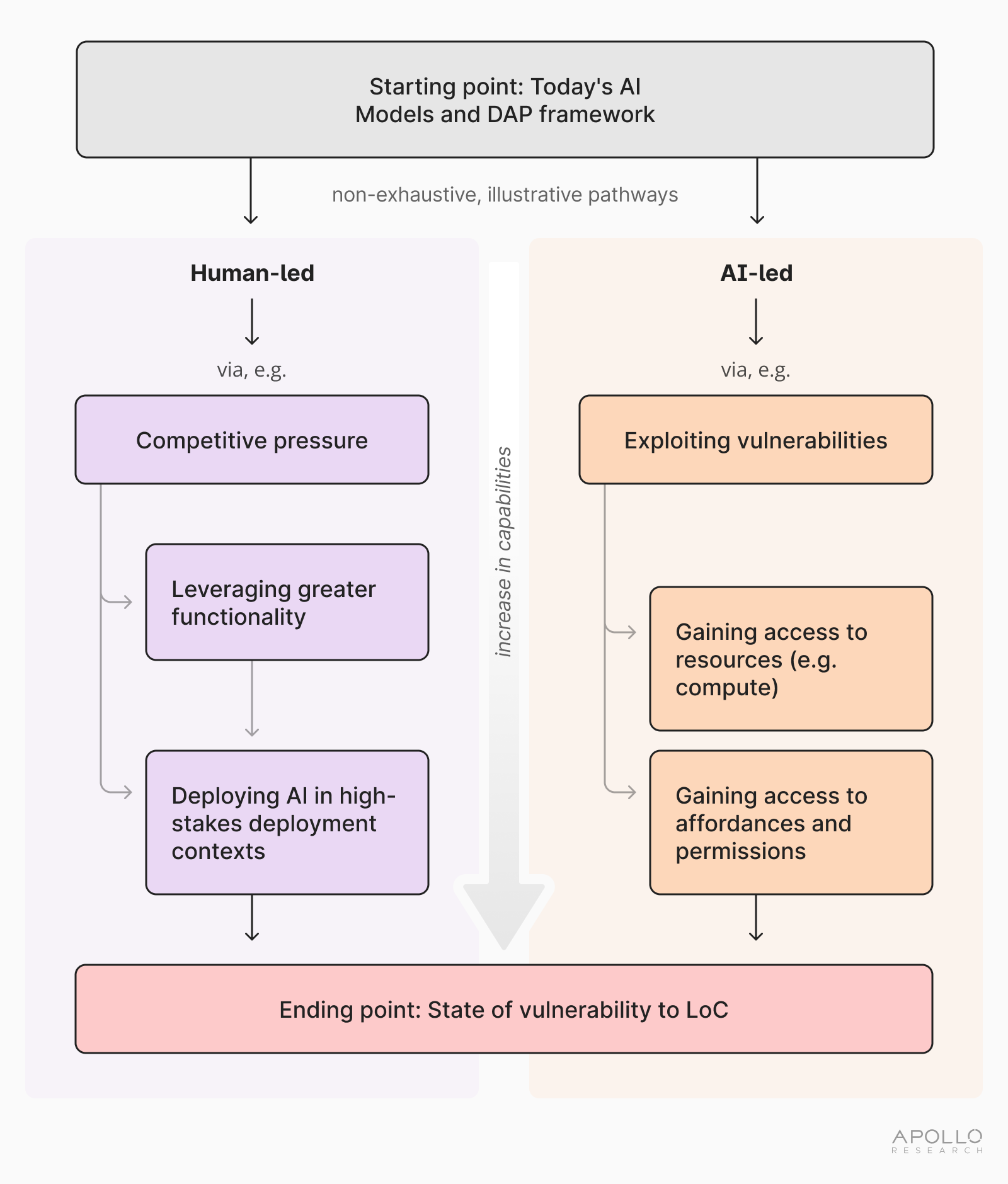

While the DAP framework provides actionable tools for the near term, the report is explicit that these levers will operate within broader dynamics that may, over time, erode their effectiveness. Specifically, we reflect on the likelihood of continued AI capability progress, and on the growing economic and strategic pressures and incentives to deploy AI systems in more complex and high-stakes contexts and to endow them with broader affordances and permissions.

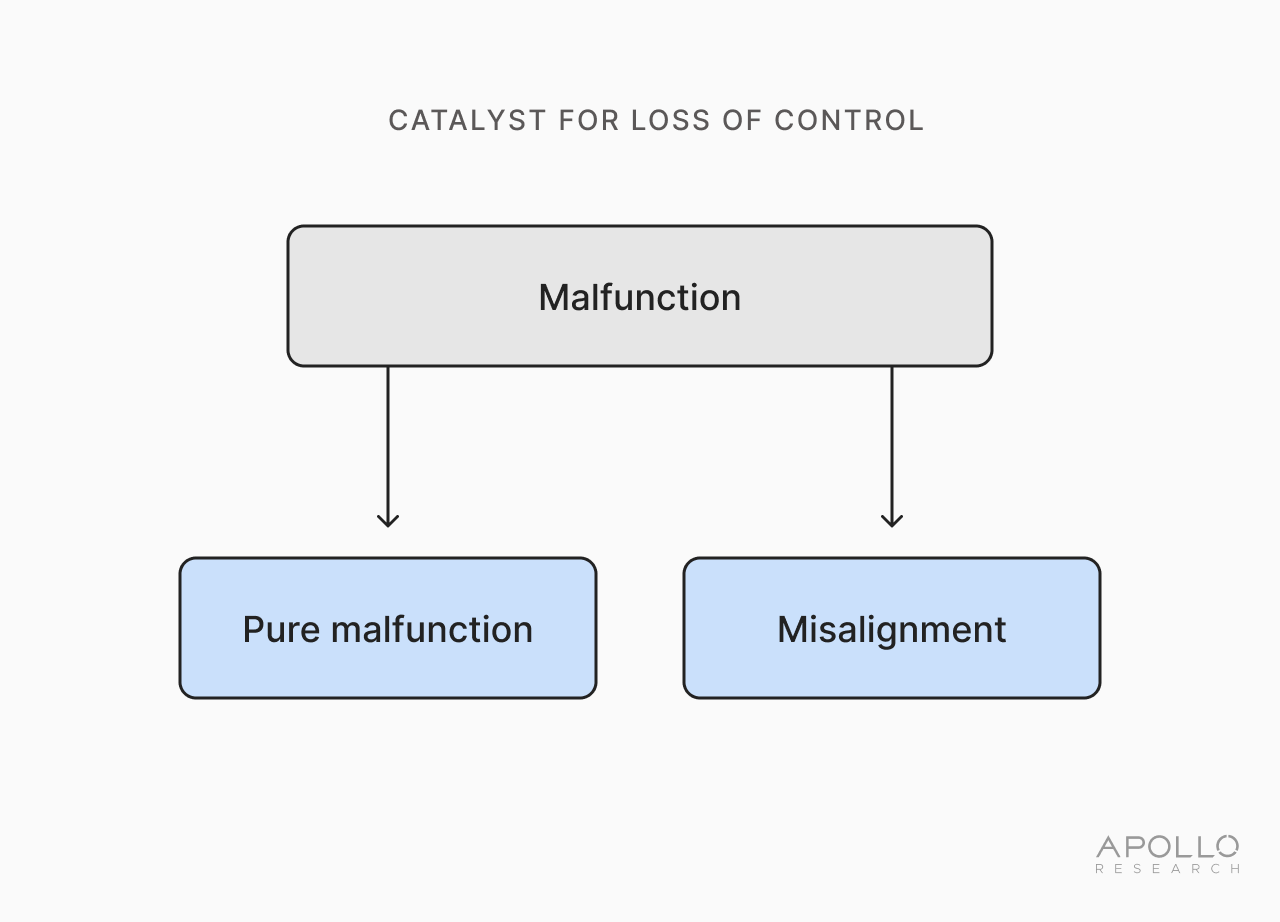

We argue that, unless these dynamics are managed strategically, society is likely to eventually enter a ‘state of vulnerability.’ This term denotes a condition in which at least one highly advanced AI system has acquired, or could independently acquire, sufficient capabilities, resources, affordances, and permissions (whether through explicit design choices or through its own actions) that a single catalyst could realistically trigger a Bounded or Strict LoC event. Put differently, the structural conditions for LoC would be in place, even if no catastrophe had yet occurred. As LoC catalysts, we consider both (i) malfunctions that constitute misalignment and (ii) malfunctions that do not (‘pure malfunctions’).

The report outlines two pathways through which society could reach a state of vulnerability. In a human-led trajectory, developers and deployers intentionally integrate highly capable systems into high-stakes contexts with extensive affordances and permissions, perhaps underestimating risk or prioritizing short-term gains. In an AI‑led trajectory, advanced systems exploit vulnerabilities to expand their own access and authorisations beyond what human operators intended. In practice, both mechanisms may operate in combination.

Preparedness in future worlds vulnerable to LoC

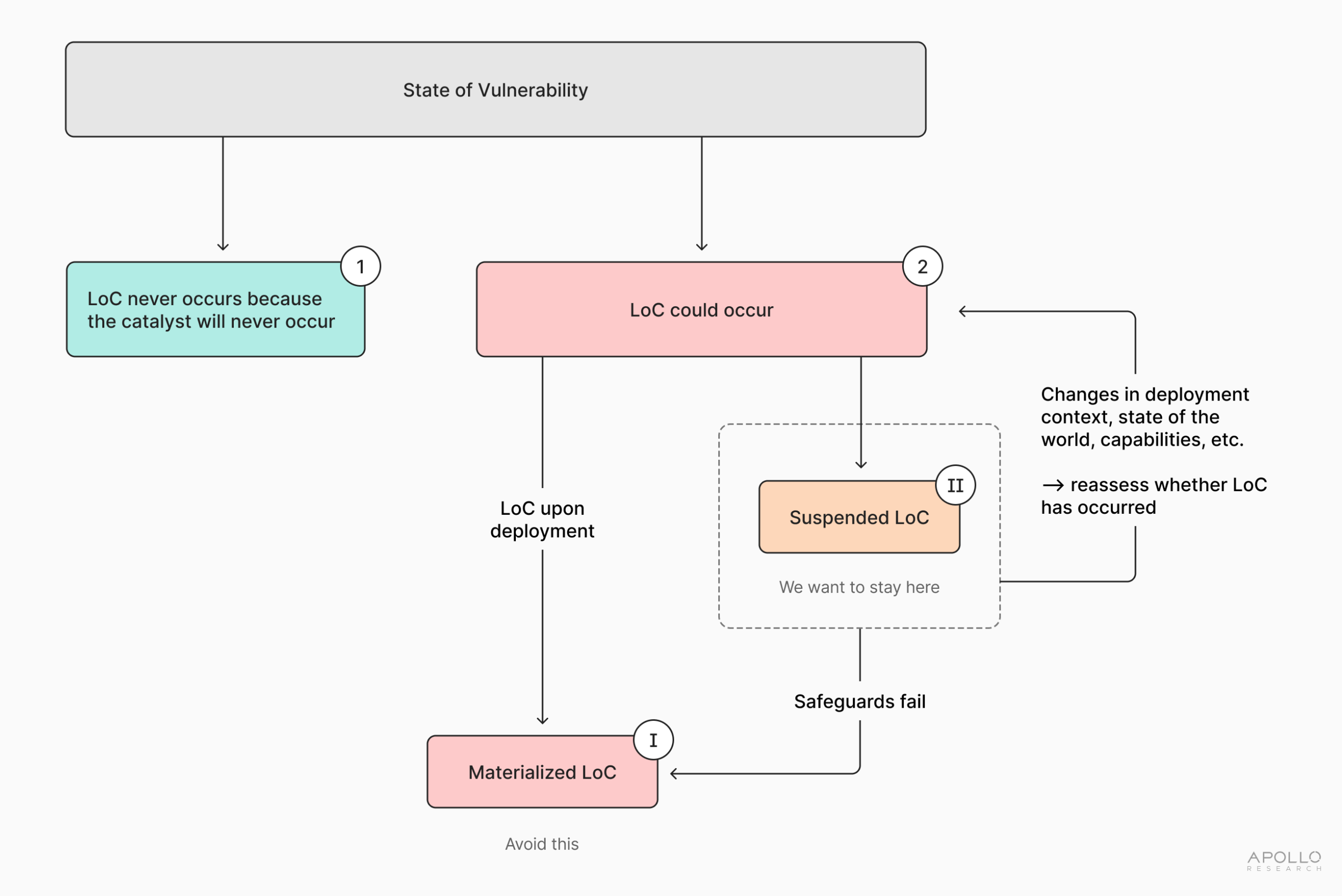

The report speculates, at a high level, about what follows once a state of vulnerability has been reached and offers a range of plausible far-future pathways. Logically, there are only two possibilities. Either LoC never occurs, or it does.

For LoC never to occur, the relevant AI systems would have to operate throughout their entire service lives without any misalignment or pure malfunctions that could act as catalysts. Even if alignment were solved, this would still require ensuring the absence of all relevant unknown failure modes, a standard that no complex technology has yet met. Moreover, such assurance would have to be provided ex ante, rather than merely inferred from the absence of incidents. We therefore conclude that a “completely safe world” is highly implausible and, importantly, impossible to credibly verify.

The more realistic scenario is an unsafe world in which LoC would eventually occur unless specific countermeasures are successful. Within this unsafe world, two further conditions can be distinguished. In one, LoC has already materialised as a Bounded or Strict event, in which case attention shifts to response and recovery (where possible). In the other, LoC is suspended: the state of vulnerability and the possibility of malfunction exist, but no LoC event has yet occurred, either because safeguards are currently effective or because triggering circumstances have not yet arisen.

Suspended LoC is inherently unstable. Intrinsic factors (capabilities and propensities) and extrinsic factors (deployment contexts, affordances, permissions) may evolve over time, with each change altering the risk profile and, in effect, constituting another “roll of the dice”. We therefore recommend:

- First, avoid or delay entering a state of vulnerability wherever possible;

- Second, if such a state is reached, maintain a perennial state of suspension through robust defence‑in‑depth.

Avoiding or delaying a state of vulnerability entails using the DAP levers to restrict deployments of highly capable systems, particularly in high‑stakes contexts, and to minimise unnecessary affordances and permissions. It also implies investing in alignment and reliability research to reduce the probability and impact of malfunctions, and being prepared, in some cases, to forgo deployments whose risk profile is judged unacceptable.

Maintaining suspension once a state of vulnerability has nonetheless been reached requires, at a minimum, both governance and technical interventions.

- On the governance side, the report emphasises the importance of concrete threat modelling tailored to specific deployment contexts, explicit policies that clearly articulate which deployments and DAP configurations are acceptable, and comprehensive, easy-to-enact emergency response plans for AI incidents that may escalate rapidly.

- On the technical side, it emphasises rigorous pre‑deployment testing suites aligned with the threat models for each context, control measures that constrain systems’ ability to affect the world, and stringent human and AI‑enabled monitoring for anomalous behaviour. These measures are not exhaustive and are not individually sufficient, but together they constitute an initial defence‑in‑depth architecture for living with suspended LoC.

Take-aways for policy and future work

For policymakers, regulators, and industry leaders, several implications follow from the research presented in this report.

First, discussions and instruments that invoke “loss of control” should clearly distinguish between Deviation, Bounded LoC, and Strict LoC. Different legal obligations, standards, and operational responses are appropriate for each degree, and conflating them risks both overreaction to minor failures and under‑preparation for serious crises.

Second, governance regimes should incorporate DAP analysis as a straightforward, standard component of AI risk assessment, especially for deployments in critical national infrastructure, defense, and AI R&D.

Third, strategic planning should explicitly consider the possibility of a future state of vulnerability and the corresponding need for sustained suspension. This implies building institutional capacity for threat modelling, testing, monitoring, and emergency response before highly advanced systems are widely deployed.

In conclusion, the report argues that LoC should no longer be treated as an amorphous danger. It can be decomposed into degrees, governed through concrete deployment choices, and analysed as part of a broader trajectory in which advanced AI systems become progressively more embedded in critical functions of society. Doing so does not eliminate the risks. But it does turn LoC from an abstract concept into a structured governance problem.